What Is A/B Testing in Marketing?

A/B testing in marketing is when you show two versions of an element (like ad copy or email subject lines) to different audience segments to determine which one performs better based on your goal.

For example, you might A/B test two images on a product page to see which one results in more sales.

You should A/B test when you need to make a data-informed decision about changes that are likely to affect performance.

The benefits of A/B testing depend on the test but can include:

- Improving conversion rates

- Reducing ad spend waste

- Boosting engagement

- Improving visibility in the search results

How Does A/B Testing Work?

A/B testing works by using tools or manual tests to randomly show different variants to two groups of users and tracking results on each to determine which performs better.
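If you’re running a manual test, here’s a minimal sketch of how the random split could work. It assumes each user has a stable ID and hashes that ID so the same person always sees the same variant. The function name `assign_variant` and the experiment label are made up for illustration. Dedicated A/B testing tools handle this step for you and may do it differently.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "popup-copy-test") -> str:
    """Return "A" or "B" for a user, stable across repeat visits.

    Hypothetical helper for illustration; not taken from any specific tool.
    """
    # Hash the experiment name together with the user ID so the same user
    # can land in different groups in different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the hash to a bucket from 0 to 99: buckets 0-49 see A, 50-99 see B.
    bucket = int(digest, 16) % 100
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    # Check that the split is roughly even across 10,000 made-up user IDs.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)
```

Hashing instead of flipping a coin on every visit means a returning user doesn’t bounce between variants, which would muddy the results.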

For example, you can use Meta’s built-in A/B testing functionality to test Facebook ads and Semrush’s SplitSignal to A/B test meta titles (the HTML titles that often show as clickable blue text in search results).

Which Variables Can You Test?

You can A/B test many variables within content—calls to action (CTAs), ad copy, images on landing pages, ad audience targeting, product descriptions on product pages, email subject lines, and more.

Here’s an in-depth list to give you more A/B testing ideas:

- Emails: Subject lines, sender name, email length, CTAs (wording, placement, style), personalization, HTML vs. plain text, preview text, and send time

- Landing pages: Headlines, subheadings, CTA buttons (color, wording, placement), testimonial placement, media, page layout, navigation menu, trust badges, and forms

- Blog posts: CTA placement, meta titles, meta descriptions, titles, and intro/hook

- Ads: Copy angles, visuals, CTA wording, targeting criteria, and ad format (carousel vs. static)

- Social media posts: Visuals, posting times, caption hooks/angles, and CTAs

How to Do A/B Testing in 5 Steps

Perform an A/B testing campaign by creating two variants, showing each variant to a portion of your audience, keeping the winner, and potentially re-testing the winner against new variations.

Let’s walk through the details using an A/B test that Semrush recently ran, which increased conversion rates by 17.5%.

1. Identify What You Want to Improve

Underperforming content or campaigns that prevent you from reaching your goals are strong candidates for A/B tests.

Look at whether any of the following metrics are falling short:

- Emails: Open rates, click-through rates, or unsubscribe rates

- Landing pages: Bounce rate, conversion rate, or time on page

- Blog posts: Time on page, scroll depth, social shares, backlinks, CTA clicks, or clicks from search

- Ads: Click-through rate, cost per click, cost per conversion, or return on ad spend

- Social media posts: Engagement rate, reach, shares, saves, or link clicks

For example, Zach Paruch, SEO Content Strategist at Semrush, noticed a scroll-activated pop-up on Semrush’s blog wasn’t converting as well as he wanted it to and decided to run an A/B test to improve form conversions.

2. Develop Your Hypothesis

Construct a hypothesis about the potential impact of a particular change to guide your A/B test.

It should tell you which element you’re testing, why you’re testing that element, and what results you hope to uncover from the test.

Use this template to form your hypothesis:

“If we change [specific variable], then we expect to see [expected outcome] because [reason based on logic, data, or user behavior].”

Zach’s hypothesis for the pop-up A/B test was:

If we change the pop-up copy from a message focused on unique value proposition (UVP) to one based on fear of missing out (FOMO), then we expect to see a higher click-through rate and conversion rate because users will feel a more immediate and emotional urge to take action.

3. Create Your Variation

Use your hypothesis to create a modified version of your control (the existing or main version you’re testing against) that changes only one element, so you can attribute any difference in results to your change.

Here’s the control and the variant for our pop-up test. We changed the messaging but kept the design the same.

4. Launch the Test

Launch your test using software that lets you compare variations and track results.

Here are some different tools for running A/B tests:

- Emails: Use built-in A/B testing tools from your email marketing platform (e.g., Mailchimp)

- Landing pages and blog posts: Software like Landing Page Builder, Optimizely, and VWO lets you A/B test website pages

- Ads: Use advertising platforms like Meta, LinkedIn, or Google to test variations in ad copy, images, or call-to-action buttons

- Social media posts: Use the A/B testing function within Meta Business Suite or a tool like Sprinklr

Run your test until you collect enough data to ensure statistical significance—which is when the results are unlikely to be caused by random chance.

If you don’t achieve statistical significance, consider extending your test to gather more data or testing something else.
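To give a feel for what a significance check involves, here’s a rough sketch of a two-proportion z-test, one common way to compare two conversion rates. The function name and the visitor and conversion counts are invented for illustration, and the 0.05 cutoff is a widely used convention rather than a rule. Your testing tool may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the gap between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if both variants truly performed the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value: chance of a gap at least this large from random noise.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Made-up example: 200 of 10,000 visitors convert on A, 260 of 10,000 on B.
    z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("Significant at 0.05" if p < 0.05 else "Not significant at 0.05")
```

A small p-value means a difference this large would rarely show up by chance alone if the two variants actually performed the same.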

Most A/B testing platforms will determine statistical significance for you. Like these results for a Facebook ad A/B test:

At Semrush, we ran our form A/B test for three weeks. And 200,000-plus users saw each version.

5. Analyze the Results and Implement the Winner

Analyze your A/B test results by comparing the metrics that correspond to your expected outcome—and keep the winner.

For our form A/B test, we analyzed the forms’ conversion rates.

The results?

The FOMO-based form increased conversion rates by 17.5%.
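A figure like this is typically a relative lift, meaning the change is measured against the control’s rate rather than in percentage points. Here’s a short sketch of that arithmetic. The 2.00% and 2.35% rates below are placeholders chosen so the lift works out to 17.5%. They aren’t the actual conversion rates from the Semrush test.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant versus the control (0.175 means +17.5%)."""
    if control_rate <= 0:
        raise ValueError("control_rate must be greater than zero")
    return (variant_rate - control_rate) / control_rate


if __name__ == "__main__":
    # Placeholder rates for illustration only (not Semrush's real data).
    control = 0.0200  # control converts 2.00% of viewers
    variant = 0.0235  # variant converts 2.35% of viewers
    lift = relative_lift(control, variant)
    points = (variant - control) * 100
    print(f"Relative lift: {lift:.1%}")
    print(f"Absolute difference: {points:.2f} percentage points")
```

Reporting both numbers helps avoid confusion, since a large relative lift can come from a small absolute change when the starting rate is low.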

Real-World Examples of A/B Tests in Digital Marketing

Facebook Ad Angles

Copywriter Dana Nicole ran a Facebook ad A/B test that decreased the cost per landing page view by nearly 28%.

Nicole used Meta’s A/B testing functionality to test different messaging angles for a restaurant.

She hypothesized that a social proof angle would incite more curiosity and drive more clicks than the control.

Nicole ran the ad for four days and around 7,000 people saw each ad. The Facebook ad with social proof received more clicks and landing page views.

Landing Page Media

Paulius Zajanckauskas, Web Growth Lead at Omnisend, used A/B testing on a landing page to increase product sign-ups by 21%.

Through customer research, Paulius and his team found that many customers weren’t fully aware of the problems that Omnisend solves.

The team hypothesized that replacing their landing page’s hero image with a product video would educate users and increase product sign-ups.

The team used a landing page testing tool to show each landing page to around 27,000 users. After about two weeks, the landing page with the video converted better than the one with an image.

Email Subject Lines

Oliver Morrisey, Founder of Empower Wills and Estate Lawyers, increased email open rates by 18% by A/B testing email subject lines.

The goal? To improve client engagement for estate planning services.

Oliver hypothesized that a personalized subject line would have a higher open rate and lead to more consultations.

He tested two subjects: "Let's talk about protecting your family's future" (personal) and "Your Estate Planning Checklist: What You Need to Know" (formal).

Oliver segmented his email list manually and sent both versions to around 500 recipients each.

The personalized version saw an 18% higher open rate. And consultations increased by 12% among those who clicked through.

Tips for Marketers Running an A/B Test for the First Time

When running your first A/B test, focus on testing one variable to clearly see how changes affect results.

Here are some more tips for a successful A/B test:

- Start with activities that are most impactful to your business

- Account for additional resources (like media assets) when planning

- Let the test run long enough to account for different behavior across days (e.g., weekdays vs. weekends)

- Allow tests to run their full course to ensure statistical significance, even if one variant appears to be outperforming early on
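To plan how long a test might need to run, you can estimate the audience size before you launch. The sketch below uses a standard sample size approximation for comparing two conversion rates. The baseline rate, target lift, and daily traffic are hypothetical inputs, and the 5% significance level and 80% power are common defaults rather than requirements. Treat the output as a rough planning number, not a guarantee.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, min_relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per variant to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days needed to reach the sample size, rounded up to whole weeks."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be greater than zero")
    days = ceil(n_per_variant * variants / daily_visitors)
    # Whole weeks keep weekday and weekend behavior equally represented.
    return ceil(days / 7) * 7


if __name__ == "__main__":
    # Hypothetical inputs: 2% baseline rate, 17.5% target lift, 5,000 visitors a day.
    n = sample_size_per_variant(0.02, 0.175)
    print(f"Users needed per variant: {n:,}")
    print(f"Suggested run time: {days_to_run(n, 5_000)} days")
```

Smaller expected lifts need much larger audiences, which is one reason low-traffic pages often take longer to test.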

Iterate and Keep Testing

Frequent A/B testing helps you stay responsive to changing markets and audiences to strengthen your marketing efforts over time.

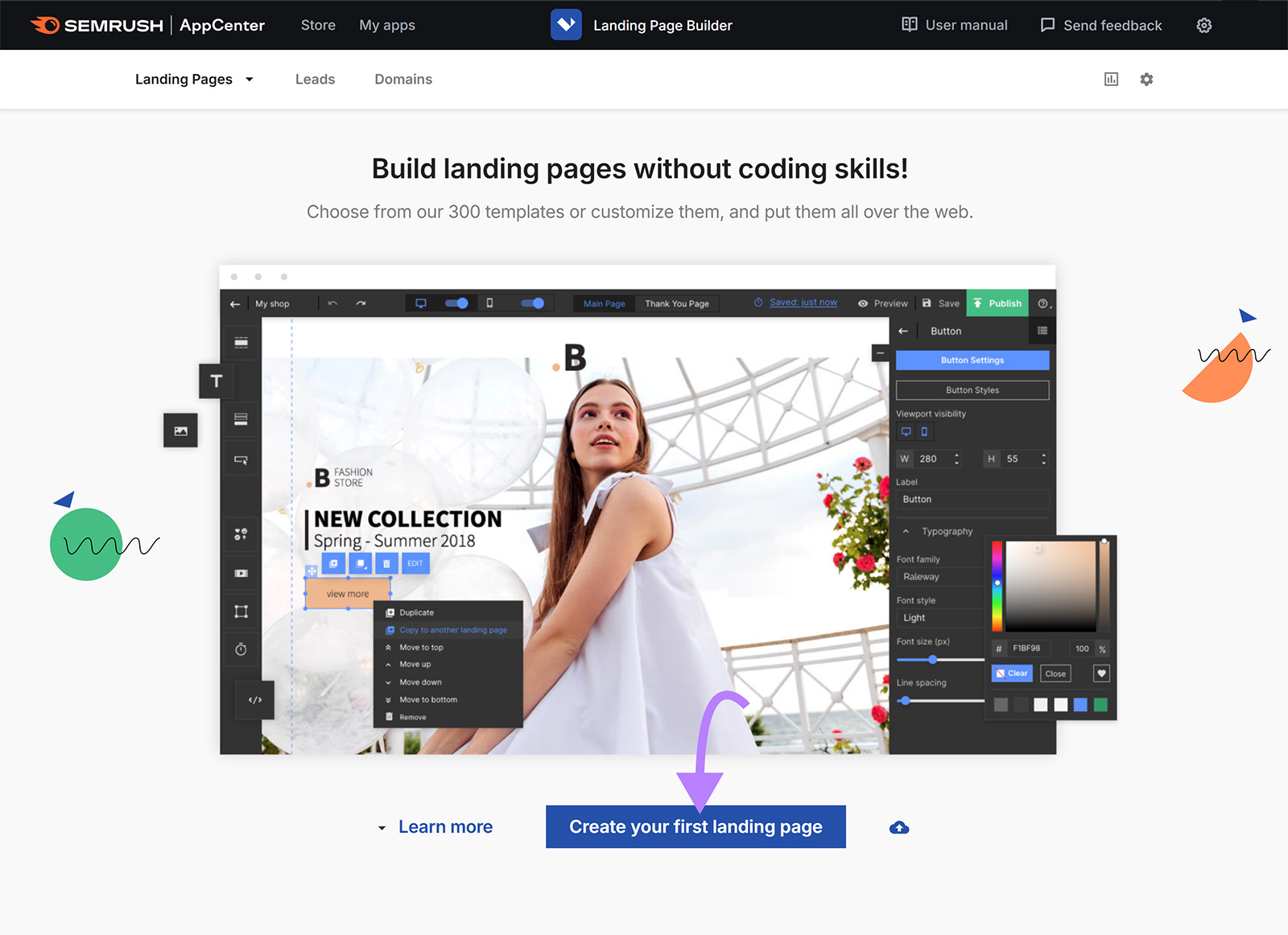

Semrush’s drag-and-drop Landing Page Builder app helps you build landing pages and A/B test different elements to ensure your landing pages convert as many visitors as possible.

To use it, click “Create your first landing page.”

Choose from a range of templates. Or start from scratch with a blank template.

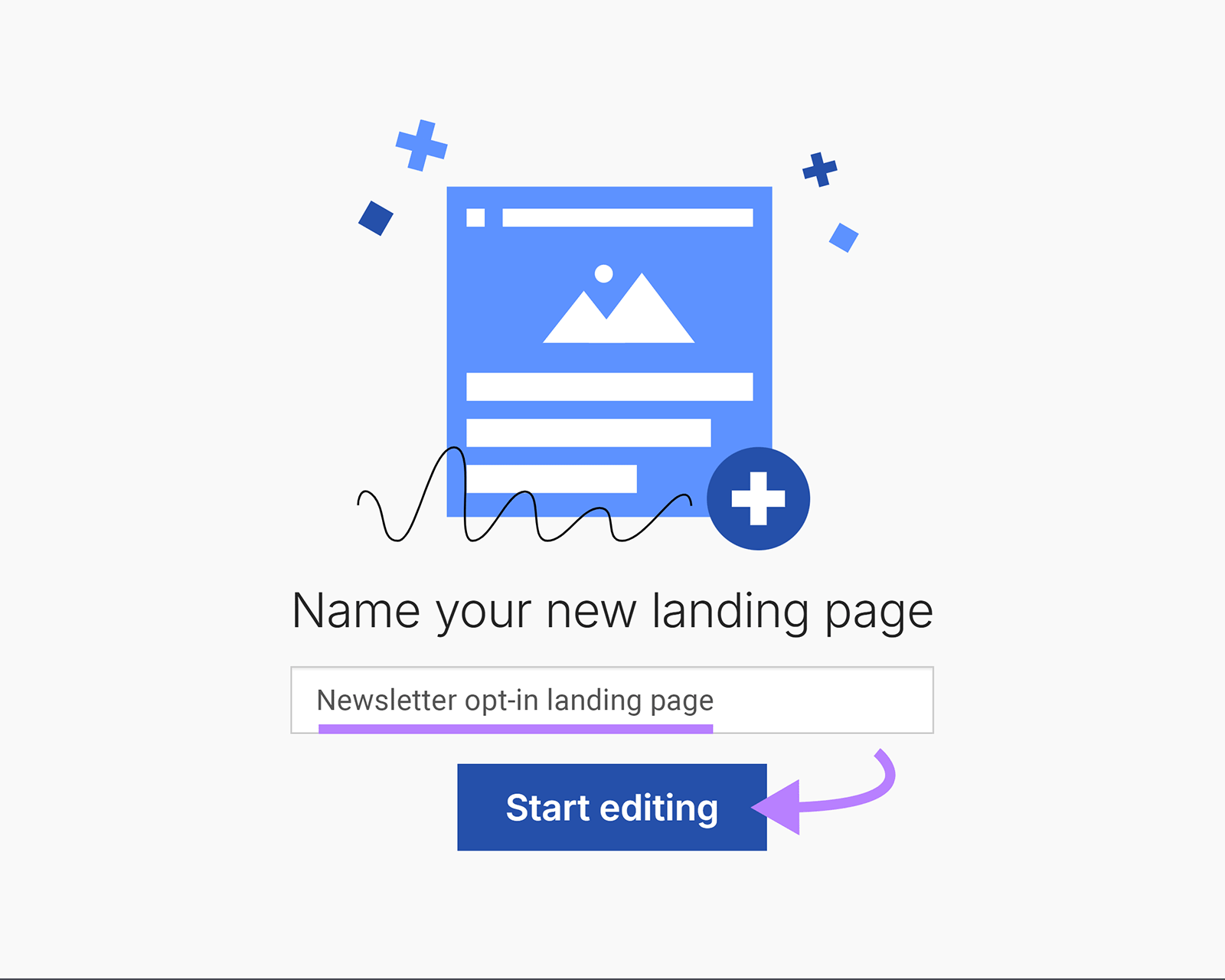

Give your landing page a name and click “Start editing.”

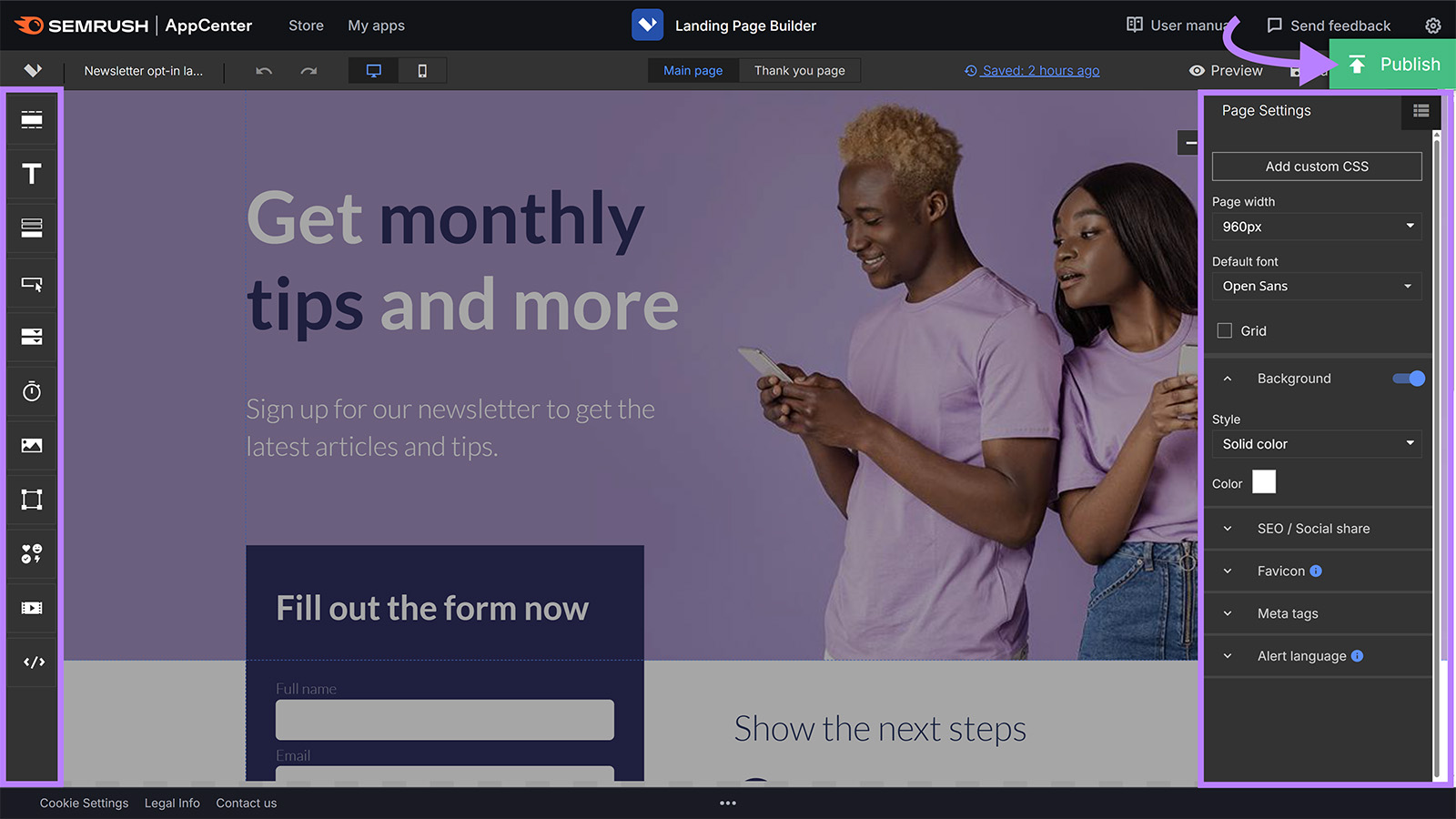

Use the drag-and-drop builder to customize your landing page and adjust your page’s settings, like its meta description (a summary of the webpage that may appear in the search results).

Click “Publish” when you’re done.

You’ll get a test domain along with options to add the landing page to your own site and domain.

Next, head into your landing page’s dashboard and click “Optimization.” Click “Add new variant” and “Duplicate the main variant.”

This creates an identical version of your landing page. Edit the variant by changing the element you’d like to test (like a CTA or the main image).

Finally, click “Start the test.” The app will split traffic automatically and measure visits, leads, and conversion rate.

Let the test run for at least a few weeks—or until you have enough data to draw a meaningful conclusion. Once your test is done, click the “Stop the test” button.

Try the Landing Page Builder app for free today.